On April 8, 2025, NVIDIA launched Llama-3.1-Nemotron-Ultra-253B, an open-weight model derived from Llama-3.1-405B. With 253 billion parameters, it is reported to outperform Meta's Llama 4 Behemoth and Llama 4 Maverick, and it has become a focal point in the AI field.

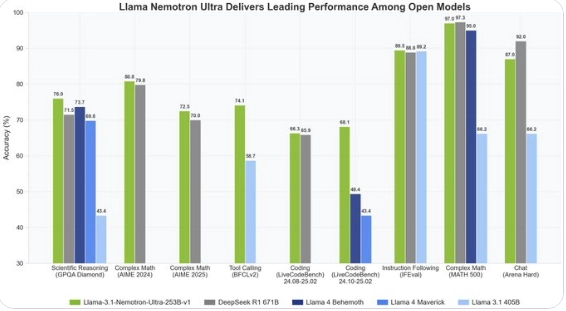

The model performs strongly on benchmarks such as GPQA-Diamond, AIME 2024/25, and LiveCodeBench, and reportedly achieves four times the inference throughput of DeepSeek R1. NVIDIA's argument is that optimized training and a more efficient design let a smaller model rival much larger ones.

The weights for Llama-3.1-Nemotron-Ultra-253B are available on Hugging Face under a commercially friendly license. This benefits developers directly and should encourage broader adoption and ecosystem growth around the model.

By challenging trillion-parameter models with a far smaller parameter count, the model embodies a "less is more" philosophy. It may prompt the industry to reconsider the parameter race and explore more sustainable paths for AI.
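To make the size difference concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. It assumes 2 bytes per parameter (BF16 storage) and ignores the KV cache, activations, and runtime overhead; the helper `weight_memory_gb` and the dtype table are illustrative, not part of any NVIDIA or Hugging Face tooling.

```python
# Rough weight-memory estimate. Assumption: weights only, no KV cache,
# activations, or framework overhead are counted.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Return the approximate size of the weights in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


NEMOTRON_ULTRA_PARAMS = 253e9  # parameter count taken from the model name
LLAMA_405B_PARAMS = 405e9      # the base model it was derived from

for name, n in [
    ("Llama-3.1-Nemotron-Ultra-253B", NEMOTRON_ULTRA_PARAMS),
    ("Llama-3.1-405B", LLAMA_405B_PARAMS),
]:
    print(f"{name}: ~{weight_memory_gb(n):.0f} GB of weights in BF16")

print(f"Parameter ratio: {NEMOTRON_ULTRA_PARAMS / LLAMA_405B_PARAMS:.2f}")
```

Under these assumptions the 253B model needs about 506 GB for its weights versus about 810 GB for the 405B base model, or roughly 62% of the parameter count. Actual serving requirements depend on precision, context length, and the inference stack.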

For developers, the model offers a high-performance, low-barrier platform for experimentation. For businesses, its commercial license and efficiency translate into lower deployment costs and a wider range of applications. Its versatility suits it to code generation, scientific research, and general natural language processing, and as developers explore it further it could drive significant change across these areas in 2025.

Address: https://huggingface.co/nvidia/Llama-3_1-Nemotron-Ultra-253B-v1