In AI-powered image generation, diffusion models (DMs) are transitioning from U-Net-based architectures to Transformer-based architectures (Diffusion Transformers, DiT). However, the DiT ecosystem still faces challenges in plugin support, efficiency, and multi-conditional control. Recently, a team led by Xiaojiu-z introduced EasyControl, a framework designed to provide efficient and flexible conditional control for DiT models, acting like a "ControlNet" for DiT.

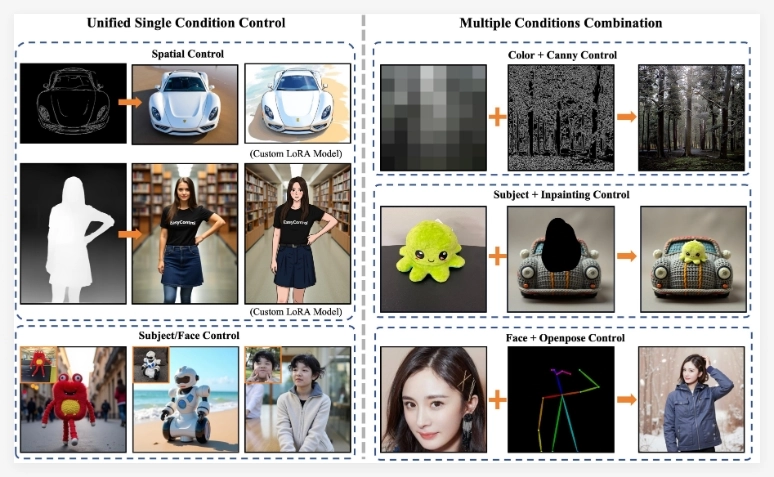

EasyControl is not a simple stack of models but a carefully designed, unified conditional DiT framework. Its core advantages come from three components: a lightweight Condition Injection LoRA module, a Position-Aware Training Paradigm, and the combination of Causal Attention with KV Cache technology. Together, these designs let EasyControl excel in model compatibility (plug-and-play, style-preserving control), generation flexibility (multiple resolutions, aspect ratios, and multi-conditional combinations), and inference efficiency.
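To make the Causal Attention and KV Cache idea more concrete, here is a minimal sketch in plain Python. It is not EasyControl's actual implementation. It assumes a toy single-head attention in which condition tokens never attend to the noisy image tokens, so their keys and values do not change between denoising steps and can be computed once and reused. The names `project`, `attend`, `ConditionKVCache`, and `denoise_step` are hypothetical and chosen only for illustration.

```python
import math


def project(tokens, weight):
    """Multiply each token vector by a weight matrix (given as a list of rows)."""
    return [
        [sum(t[i] * weight[i][j] for i in range(len(t))) for j in range(len(weight[0]))]
        for t in tokens
    ]


def attend(queries, keys, values):
    """Scaled dot-product attention for a single head."""
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in keys]
        top = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


class ConditionKVCache:
    """Keys and values of the condition tokens, computed once before sampling.

    Assumption: condition tokens do not depend on the noisy image tokens,
    so their K/V are identical at every denoising step.
    """

    def __init__(self, cond_tokens, w_k, w_v):
        self.keys = project(cond_tokens, w_k)
        self.values = project(cond_tokens, w_v)


def denoise_step(image_tokens, cache, w_q, w_k, w_v):
    """One attention pass: image tokens attend to themselves and to the cached condition K/V."""
    queries = project(image_tokens, w_q)
    keys = project(image_tokens, w_k) + cache.keys      # list concatenation
    values = project(image_tokens, w_v) + cache.values  # list concatenation
    return attend(queries, keys, values)


# Usage: build the cache once, then reuse it for every denoising step.
identity = [[1.0, 0.0], [0.0, 1.0]]
cond_tokens = [[1.0, 2.0], [0.5, -1.0]]
cache = ConditionKVCache(cond_tokens, identity, identity)
image_tokens = [[0.3, 0.1], [-0.2, 0.7], [1.0, 1.0]]
for _ in range(3):
    image_tokens = denoise_step(image_tokens, cache, identity, identity, identity)
```

In this sketch the saving comes from projecting the condition tokens only once. Without the cache, the condition branch would be recomputed at every step even though its result never changes.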

One of the most striking features of EasyControl is its multi-conditional control capability. Its codebase shows support for a range of control types, including but not limited to Canny edge detection, depth information, HED edge sketches, image inpainting, human pose (analogous to OpenPose), and semantic segmentation (Seg).

This means users can precisely guide the DiT model to generate images with specific structures, shapes, and layouts by inputting different control signals. For example, Canny control allows users to specify the outline of the generated object; pose control can guide the generation of images with specific human actions. This precise control significantly expands the application scenarios of DiT models.
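As an illustration of what such a control signal looks like, the sketch below turns a grayscale image into a binary edge map. It is a simplified stand-in, not real Canny edge detection and not EasyControl's preprocessing: it only thresholds the Sobel gradient magnitude, while Canny additionally applies smoothing, non-maximum suppression, and hysteresis. The function name `edge_map` and the threshold value are assumptions made for this example.

```python
def edge_map(gray, threshold=100):
    """Return a binary edge map (0 or 255) from a 2D list of grayscale values.

    Uses 3x3 Sobel gradients and a fixed threshold. Border pixels are left at 0.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right column minus left column.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]) - (
                gray[y - 1][x - 1] + 2 * gray[y][x - 1] + gray[y + 1][x - 1]
            )
            # Vertical gradient: bottom row minus top row.
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]) - (
                gray[y - 1][x - 1] + 2 * gray[y - 1][x] + gray[y - 1][x + 1]
            )
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 255
    return edges


# Usage: a 5x6 image that is dark on the left half and bright on the right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
outline = edge_map(image)
```

The resulting map marks only the boundary between the dark and bright regions. An outline of this kind is what a Canny-conditioned model would be asked to follow when generating the image.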

Beyond basic structural control, EasyControl also demonstrates strong style transfer capabilities, particularly in Ghibli-style conversion. The research team trained a dedicated LoRA model using only 100 real Asian faces paired with corresponding Ghibli-style images generated by GPT-4o. Despite the small dataset, the model can convert portraits into the classic Ghibli animation style while preserving the original facial features well. Users can upload portrait photos and, with appropriate prompts, generate artwork with a strong hand-drawn anime style. The project team also provides a Gradio demo so users can try this functionality online.

The EasyControl team has already released the inference code and pre-trained weights. According to its Todo List, future releases will include spatial pre-trained weights, subject pre-trained weights, and training code, further enhancing EasyControl's functionality and providing researchers and developers with more comprehensive tools.

EasyControl brings strong control capabilities to Transformer-based diffusion models and addresses a key weakness of DiT models in conditional control. Its support for multiple control modes and its Ghibli style transfer results suggest broad application prospects in AI content generation. Being efficient, flexible, and easy to use, EasyControl is poised to become an important component of the DiT ecosystem.

Project Link: soraor.com